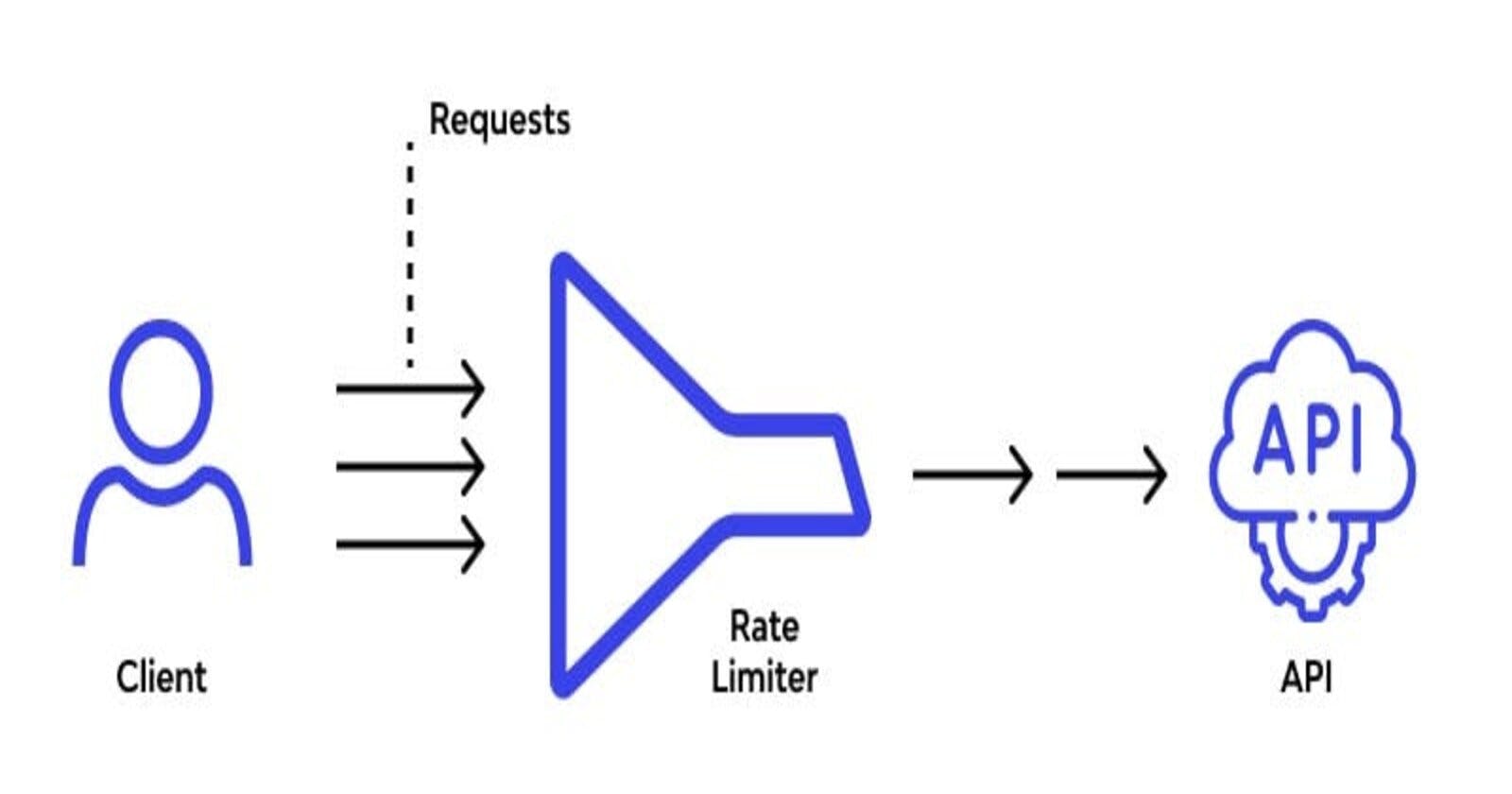

APIs and distributed systems need to ensure fair usage and prevent abuse. Rate limiting, a technique that caps how many requests a client may make in a given period, helps keep a service stable and its resources fairly shared.

Among rate limiting algorithms, the token bucket algorithm stands out for its simplicity and effectiveness. In this blog post, we'll explore how to implement rate limiting using the token bucket algorithm in a Node.js application with Express.

We'll build the limiter as a small Express middleware that tracks one bucket per client IP address, then apply it to a single route so that the rest of the application stays unrestricted.

Token Bucket Algorithm

Before diving into the implementation, let's understand the token bucket algorithm. It operates on a simple principle: imagine a bucket that holds tokens. Tokens are added to the bucket at a fixed rate until it reaches a maximum capacity, and each incoming request must consume a token. Because unused tokens accumulate up to that capacity, a client that has been idle can send a short burst of requests, while its sustained rate stays bounded by the refill rate. If no token is available when a request arrives, the request is either delayed or rejected.
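To make the idea concrete before any Express code appears, here is a minimal standalone sketch of a bucket. The class name `TokenBucket`, its methods, and the explicit `now` argument (used so the example does not depend on the real clock) are illustrative assumptions, not part of the implementation that follows.

```javascript
// Minimal token bucket sketch. All names here are illustrative.
class TokenBucket {
  constructor(capacity, refillIntervalMs, now = Date.now()) {
    this.capacity = capacity;                 // Maximum tokens the bucket can hold
    this.refillIntervalMs = refillIntervalMs; // Milliseconds needed to earn one token
    this.tokens = capacity;                   // The bucket starts full
    this.lastRefill = now;
  }

  // Add the tokens earned since the last refill, capped at capacity.
  refill(now) {
    if (now <= this.lastRefill) return;
    const earned = (now - this.lastRefill) / this.refillIntervalMs;
    this.tokens = Math.min(this.capacity, this.tokens + earned);
    this.lastRefill = now;
  }

  // Returns true and spends one token if one is available, otherwise false.
  tryConsume(now = Date.now()) {
    this.refill(now);
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// A bucket of 3 tokens that earns one token per second, created at time 0.
const demoBucket = new TokenBucket(3, 1000, 0);
const burst = [0, 0, 0, 0].map((t) => demoBucket.tryConsume(t));
const afterOneSecond = demoBucket.tryConsume(1000);
console.log(burst, afterOneSecond);
```

The first three requests in the burst spend the initial tokens, the fourth is refused, and a request one second later succeeds because one token has been earned in the meantime.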

Implementation

Now, let's break down the implementation of rate limiting using the token bucket algorithm in our Express application:

Part 1: Setting up Express

Let's start by setting up our Express application. We first initialize a new Node.js project and install the Express framework.

    npm init -y
    npm i express

Now, let us import Express, initialize an instance of it, and define the port on which our server will listen for incoming requests:

    const express = require('express');
    const app = express();
    const port = 8080;

Part 2: Token Bucket Initialization

In this part, we initialize a Map object named tokenBucket to store tokens per IP address. Each IP address corresponds to a token bucket, which will be used for rate limiting.

    // Map to store tokens per IP address
    const tokenBucket = new Map();

Part 3: Rate Limit Middleware

Here, we define a middleware function named rateLimit responsible for implementing the token bucket algorithm. It takes req (request), res (response), and next (callback) parameters. Inside this middleware, we'll implement the token bucket logic to enforce rate limiting.

    // Middleware function to implement token bucket algorithm
    const rateLimit = (req, res, next) => {
      const ip = req.ip;
      const capacity = 10; // Capacity of the token bucket
      const fillRate = 1000; // Milliseconds needed to add one token (1 token per second)

      // ...
    };

Next, we fill in the token bucket logic inside the rateLimit middleware, in place of the // ... placeholder. We first check whether a token bucket exists for the IP address. If not, we create one that starts full and records the current time as its last update.

    // Create token bucket if it doesn't exist for the IP address
    if (!tokenBucket.has(ip)) {
      tokenBucket.set(ip, {
        tokens: capacity,
        lastUpdate: Date.now()
      });
    }

    // ...

We then refill the bucket: the time elapsed since the last update, divided by the fill rate, gives the number of tokens earned (possibly a fraction), and the total is capped at the bucket's capacity. If at least one token is available, we consume it and proceed to the next middleware. Otherwise, we send a 429 Too Many Requests response.

    const bucket = tokenBucket.get(ip);
    const now = Date.now();
    const elapsedTime = now - bucket.lastUpdate;
    const tokensToAdd = elapsedTime / fillRate;

    bucket.tokens = Math.min(capacity, bucket.tokens + tokensToAdd);
    bucket.lastUpdate = now;

    // Check if there are enough tokens to handle the request
    if (bucket.tokens >= 1) {
      bucket.tokens -= 1;
      next(); // Proceed to the next middleware
    } else {
      res.status(429).send('Too Many Requests'); // Return 429 status code
    }
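Since the middleware is presented in fragments, it may help to see the pieces from Parts 2 and 3 assembled in one place. The sketch below wraps them in a factory so the limiter can be exercised without a running server. The factory name `createRateLimit`, its options object, the injectable `now` clock, and the stub request and response objects are assumptions made for illustration; the logic inside mirrors the fragments above.

```javascript
// Sketch: the token bucket fragments assembled into one middleware factory.
// `createRateLimit` and the injectable `now` clock are illustrative names.
function createRateLimit({ capacity = 10, fillRate = 1000, now = Date.now } = {}) {
  const tokenBucket = new Map(); // One bucket per IP address

  return function rateLimit(req, res, next) {
    const ip = req.ip;
    const current = now();

    // Create a full bucket the first time an IP address is seen
    if (!tokenBucket.has(ip)) {
      tokenBucket.set(ip, { tokens: capacity, lastUpdate: current });
    }

    // Refill according to the elapsed time, capped at capacity
    const bucket = tokenBucket.get(ip);
    const elapsedTime = current - bucket.lastUpdate;
    bucket.tokens = Math.min(capacity, bucket.tokens + elapsedTime / fillRate);
    bucket.lastUpdate = current;

    if (bucket.tokens >= 1) {
      bucket.tokens -= 1;
      next(); // Proceed to the next middleware
    } else {
      res.status(429).send('Too Many Requests');
    }
  };
}

// Exercise the middleware with stub objects and a clock we control.
let clock = 0;
const limiter = createRateLimit({ capacity: 2, fillRate: 1000, now: () => clock });
const outcomes = [];
const fakeReq = { ip: '203.0.113.7' };
const fakeRes = {
  status(code) { outcomes.push(code); return this; },
  send() { return this; }
};
const pass = () => outcomes.push('next');

limiter(fakeReq, fakeRes, pass); // Spends the first token
limiter(fakeReq, fakeRes, pass); // Spends the second token
limiter(fakeReq, fakeRes, pass); // Bucket is empty
clock = 1000;                    // One second later, one token has been earned
limiter(fakeReq, fakeRes, pass);
console.log(outcomes);
```

In an Express application, the returned function can be passed to a route in the same position as any other middleware. The factory form also makes it straightforward to give different routes different capacities.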

Part 4: Route Definitions

In this part, we define two routes: /limited and /unlimited. For the /limited route, we apply the rateLimit middleware to enforce rate limiting. The /unlimited route is not subject to rate limiting and serves as an example of a route without restrictions.

    // Route for /limited with rate limiting middleware applied
    app.get('/limited', rateLimit, (req, res) => {
      res.send('Limited, don\'t overuse me!');
    });

    // Route for /unlimited without rate limiting
    app.get('/unlimited', (req, res) => {
      res.send('Unlimited! Let\'s Go!');
    });

Part 5: Server Startup

Finally, we start the Express server and make it listen on the specified port. This ensures that our server is up and running, ready to handle incoming requests.

    // Start the server
    app.listen(port, () => {
      console.log(`Server is listening at http://127.0.0.1:${port}`);
    });

Now we can run this server using Node:

    # node [file-name].js
    node server.js

Here's a demo performance test for these endpoints:
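One way to try this yourself is a small script that sends a burst of requests and counts the status codes that come back. This is a sketch under a few assumptions: the helper names `tally` and `hammer` and the file name `load-test.js` are made up for illustration, the server from this post is running on port 8080, and the global `fetch` of Node 18 or later is available.

```javascript
// Sketch of a burst test for the routes above. `tally` and `hammer` are
// illustrative helper names, not part of Express or Node.

// Count how many times each HTTP status code appears.
function tally(statuses) {
  const counts = {};
  for (const status of statuses) {
    counts[status] = (counts[status] || 0) + 1;
  }
  return counts;
}

// Send `count` requests concurrently and summarize the status codes.
// Assumes the global fetch available in Node 18 and later.
async function hammer(url, count) {
  const statuses = await Promise.all(
    Array.from({ length: count }, async () => {
      const response = await fetch(url);
      await response.text(); // Read the body so the connection is released
      return response.status;
    })
  );
  return tally(statuses);
}

// Runs only when a URL is passed on the command line, for example:
//   node load-test.js http://127.0.0.1:8080/limited
const target = process.argv[2];
if (target) {
  hammer(target, 15)
    .then((counts) => console.log(counts))
    .catch((error) => console.error('Request failed:', error.message));
}
```

With a capacity of 10 and a fresh bucket, a burst of 15 requests against /limited should produce roughly ten 200 responses and five 429 responses, while the same burst against /unlimited should return only 200s.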

Conclusion

In this blog post, we've explored how to implement rate limiting using the token bucket algorithm in a Node.js application with Express: one bucket per IP address, refilled according to elapsed time, with a 429 response once the bucket is empty. Rate limiting is a crucial technique for maintaining the stability and performance of APIs and distributed systems. With this knowledge, you're well-equipped to implement rate limiting in your own Express applications. Cheers!